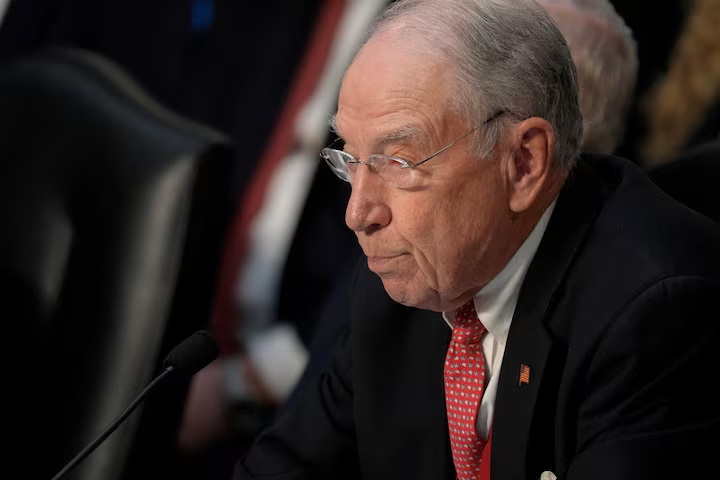

Two U.S. federal judges have acknowledged that their staff used artificial intelligence tools to draft rulings that later proved to contain factual and procedural errors. The admissions came in letters to U.S. Senate Judiciary Committee Chairman Chuck Grassley, who had requested explanations after noticing what he described as “error-ridden” decisions.

Judges confirm unauthorized AI use

According to Reuters, U.S. District Judge Julien Xavier Neals of New Jersey and U.S. District Judge Henry Wingate of Mississippi both said the flawed rulings bypassed their courts’ usual review processes. Neals explained that a law school intern in his Newark chambers used OpenAI’s ChatGPT without permission or disclosure while preparing a draft opinion in a securities case.

Neals told the committee that the draft decision was mistakenly released but “withdrawn as soon as it was brought to the attention of my chambers.” He said his office has since adopted a formal policy regulating AI use and strengthened its internal review process.

Meanwhile, Wingate revealed that a law clerk in his Jackson court relied on the AI platform Perplexity “as a foundational drafting assistant” to synthesize public case information. The draft ruling was uploaded in error, which Wingate described as “a lapse in human oversight.” He subsequently removed and replaced the original order, which had been issued in a civil rights lawsuit, and at the time described the errors as “clerical.”

Grassley urges stronger AI safeguards

In a statement quoted by Reuters, Senator Grassley praised the judges for their transparency but called for stricter rules across the federal judiciary. “Each federal judge, and the judiciary as an institution, has an obligation to ensure the use of generative AI does not violate litigants’ rights or prevent fair treatment under the law,” he said.

Grassley had raised concerns earlier this month after attorneys in both cases identified factual inaccuracies in the original rulings. His inquiry marks one of the first formal congressional efforts to examine how AI is being used inside federal courts.

Growing concern over AI misuse in law

Courts across the United States have increasingly confronted the misuse of AI-generated material in legal filings. Judges have imposed sanctions and fines in dozens of cases where lawyers failed to verify information produced by chatbots or other AI tools.

Both Neals and Wingate have implemented new safeguards to prevent similar mistakes, according to their letters. Their admissions add to a growing debate over how the U.S. judiciary should balance efficiency and accountability as artificial intelligence becomes more embedded in legal work.